SGRACE (Scalable Graph Attention and Convolutional Network Engine)

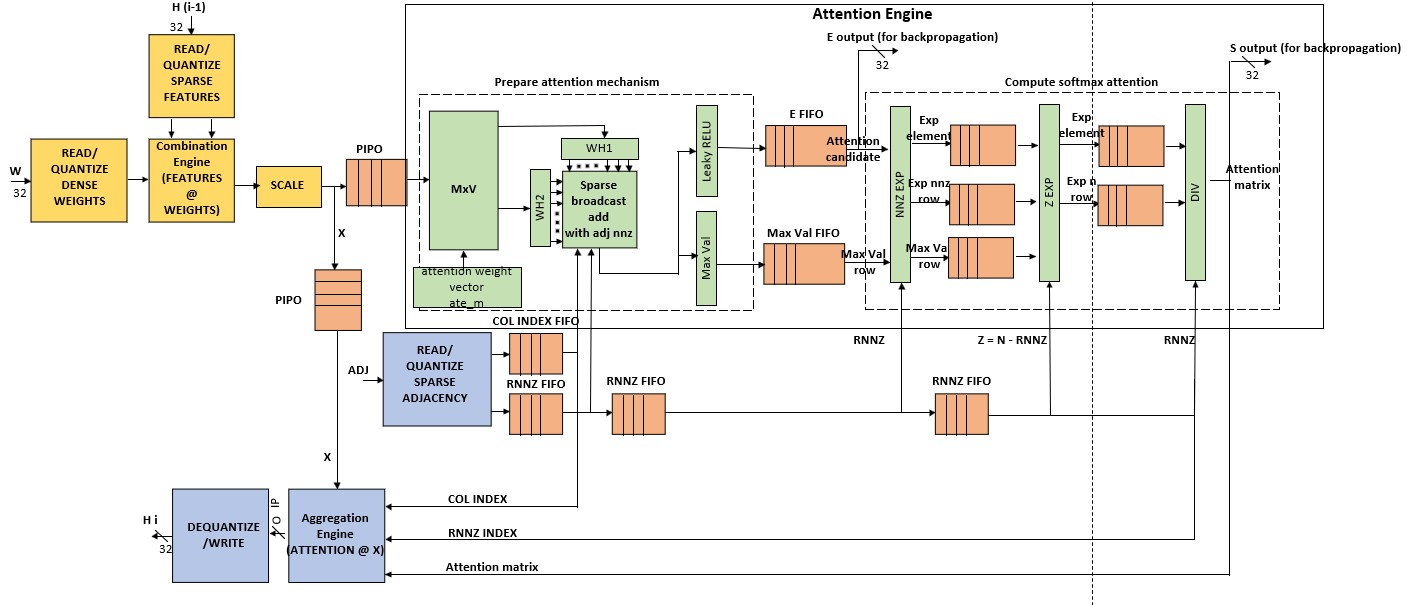

SGRACE is a hardware accelerator for on-device inference and training of deeply quantized graph convolutional networks (GCNs) and graph attention networks (GATs). The architecture supports both GAT and GCN in a single dataflow without breaking the global matrix formulation needed for high performance.
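To illustrate what a shared matrix formulation for GCN and GAT can look like, here is a minimal floating-point NumPy sketch. It follows the textbook GCN and GAT layer definitions, not the SGRACE hardware dataflow, and it ignores quantization. The function names, the attention vectors `a_src` and `a_dst`, and the toy graph are all assumptions made for illustration.

```python
import numpy as np

def normalized_adjacency(adj):
    """GCN coefficients: D^-1/2 (A + I) D^-1/2."""
    a = adj + np.eye(adj.shape[0])           # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def attention_coefficients(adj, h, a_src, a_dst, slope=0.2):
    """GAT coefficients: row-wise softmax of LeakyReLU scores over neighbours."""
    a = adj + np.eye(adj.shape[0])           # add self-loops
    e = (h @ a_src)[:, None] + (h @ a_dst)[None, :]
    e = np.where(e > 0, e, slope * e)        # LeakyReLU
    e = np.where(a > 0, e, -np.inf)          # mask out non-edges
    e = e - e.max(axis=1, keepdims=True)     # numerical stability
    w = np.exp(e)
    return w / w.sum(axis=1, keepdims=True)

def graph_layer(adj, x, w, attention=None):
    """One layer as relu(C @ (X @ W)); only the coefficient matrix C differs."""
    h = x @ w
    if attention is None:
        coeff = normalized_adjacency(adj)                     # GCN path
    else:
        coeff = attention_coefficients(adj, h, *attention)    # GAT path
    return np.maximum(coeff @ h, 0.0)

# Toy 4-node path graph with random features (illustrative values only).
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = rng.normal(size=(4, 3))
w = rng.normal(size=(3, 2))
a_src, a_dst = rng.normal(size=2), rng.normal(size=2)

gcn_out = graph_layer(adj, x, w)
gat_out = graph_layer(adj, x, w, attention=(a_src, a_dst))
```

In this sketch both layer types reduce to the same two matrix products, and only the way the coefficient matrix is built changes between GCN and GAT.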

SGRACE Architecture.

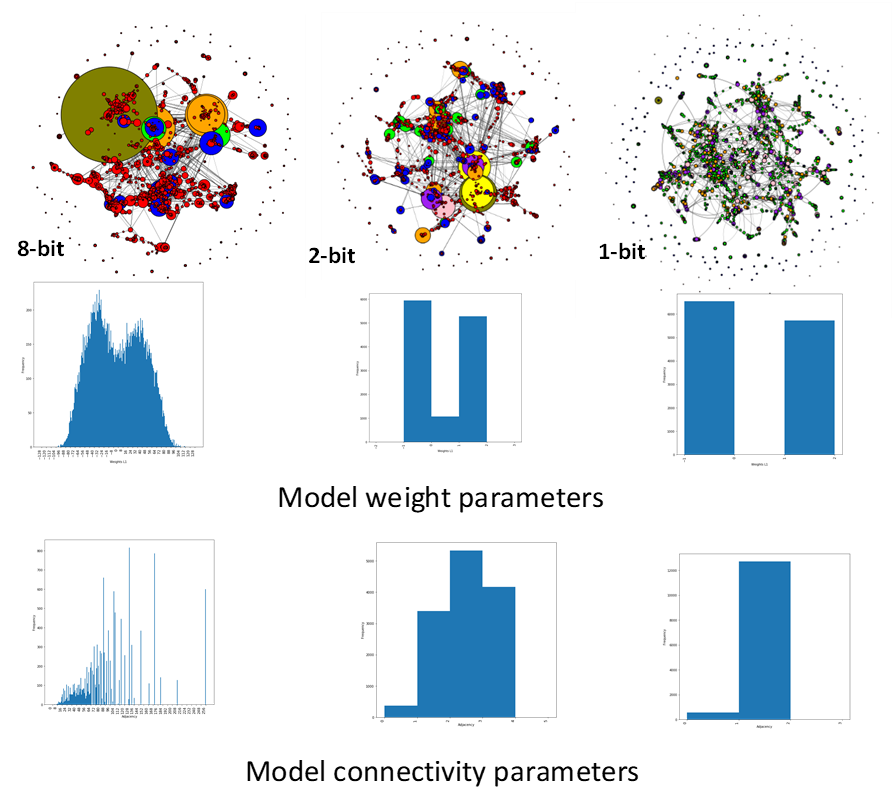

SGRACE Adaptive Quantization and Pruning.

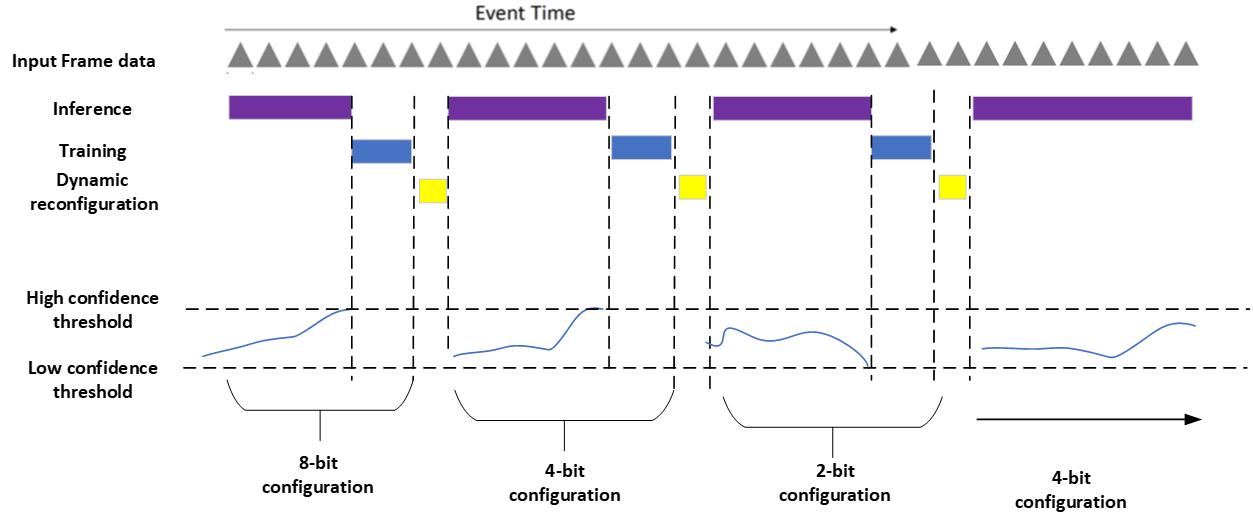

SGRACE Continual adaptation and learning.

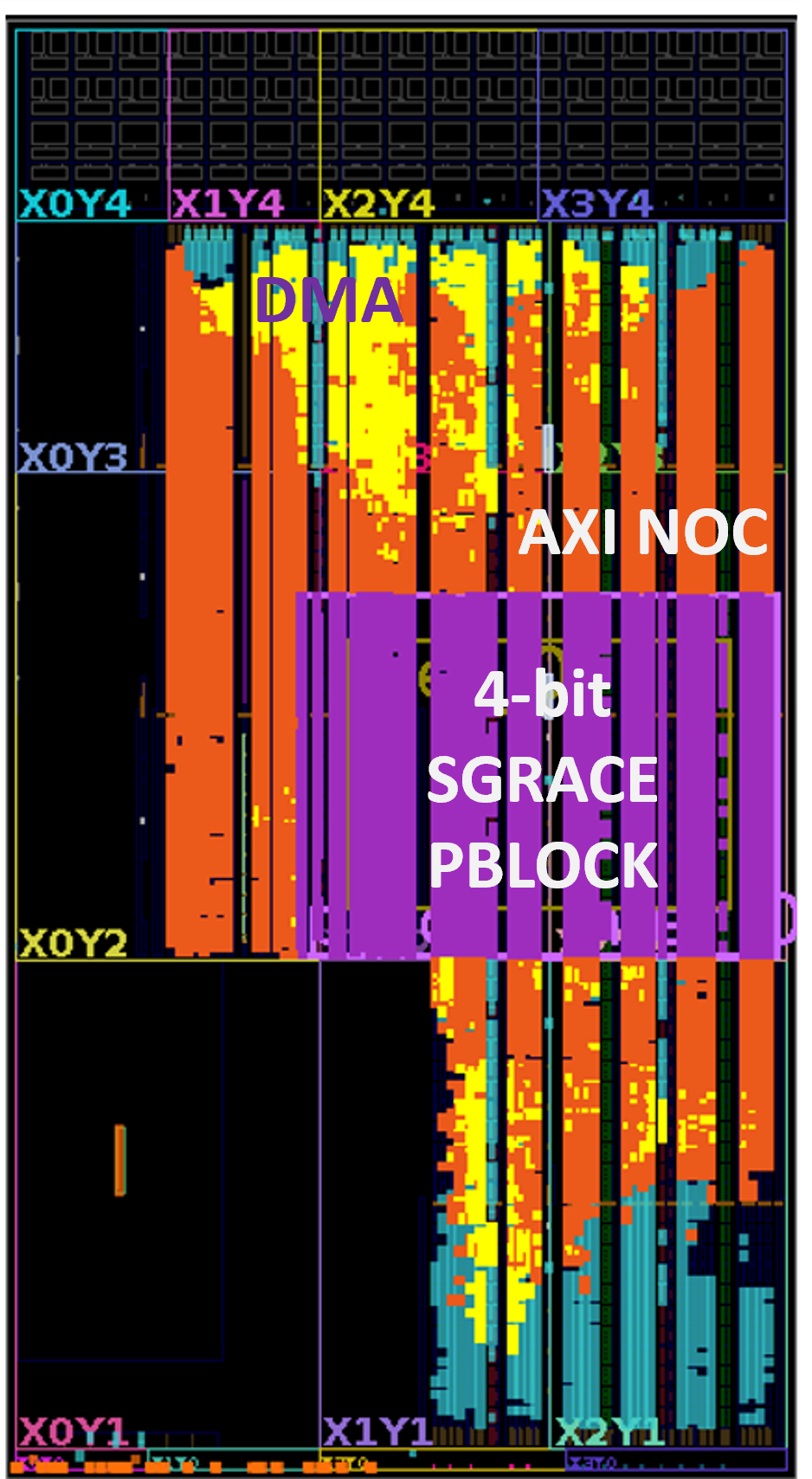

SGRACE targets Zynq and Versal MPSoC devices and adapts its internal configuration to the precision requirements determined at run time from network confidence measurements. Simpler data uses lower precision, which requires less logic and yields higher performance with lower complexity. As a result, its configuration and parameters are continually adapted to the system state, input data, and task complexity.
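The idea of choosing precision from a confidence measurement can be sketched in software as follows. This is a simplified illustration, not the SGRACE run-time mechanism: the confidence metric (maximum softmax probability), the candidate bit widths, the threshold, and all function names are assumptions, and a single linear classifier stands in for the network.

```python
import numpy as np

def quantize(x, bits):
    """Symmetric uniform fake-quantization to a signed grid (assumes bits >= 2)."""
    levels = 2 ** (bits - 1) - 1
    scale = np.max(np.abs(x)) / levels
    if scale == 0:
        return x.copy()
    return np.round(x / scale) * scale

def confidence(logits):
    """Maximum softmax probability, used here as the confidence measure."""
    z = logits - logits.max()
    p = np.exp(z) / np.exp(z).sum()
    return float(p.max())

def infer_adaptive(x, w, bit_widths=(2, 4, 8), threshold=0.9):
    """Try the lowest precision first; escalate while confidence is too low."""
    for bits in bit_widths:
        logits = quantize(x, bits) @ quantize(w, bits)
        conf = confidence(logits)
        if conf >= threshold:
            break
    return bits, conf, int(np.argmax(logits))

# Illustrative input and weights (random values, not a trained model).
rng = np.random.default_rng(0)
x = rng.normal(size=16)
w = rng.normal(size=(16, 3))
bits_used, conf, label = infer_adaptive(x, w)
```

Inputs that are already classified confidently at low precision stop early, while harder inputs fall through to wider bit widths, which mirrors the behaviour described above at a purely algorithmic level.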

Check the research articles on SGRACE:

J. Nunez-Yanez and H. Mousanejad Jeddi, 'SGRACE: Scalable Architecture for On-Device Inference and Training of Graph Attention and Convolutional Networks', IEEE Transactions on Very Large Scale Integration (VLSI) Systems, doi: 10.1109/TVLSI.2025.3591522.

Habib Taha Kose, Jose Nunez-Yanez, Robert Piechocki, James Pope, 'A Survey of Computationally Efficient Graph Neural Networks for Reconfigurable Systems', Information 15:377 (2024), https://doi.org/10.3390/info15070377

SGRACE research is sponsored by the WASP foundation in Sweden. Check the GitHub SGRACE project.

SGRACE Dynamic Function Exchange.